For my exploration of soft materials, I was really captivated by the trash I collected during my week of being sick. I got a lot of takeout, and that comes with many soft materials: bags, napkins, packets of goop, packets of salt… it’s a soft score!

What’s considered soft, anyway? In class, we decided that soft meant bendy, squishy, stretchy, flexible, compressible, elastic, and deformable. Softness can also come from an item’s material properties or from its form.

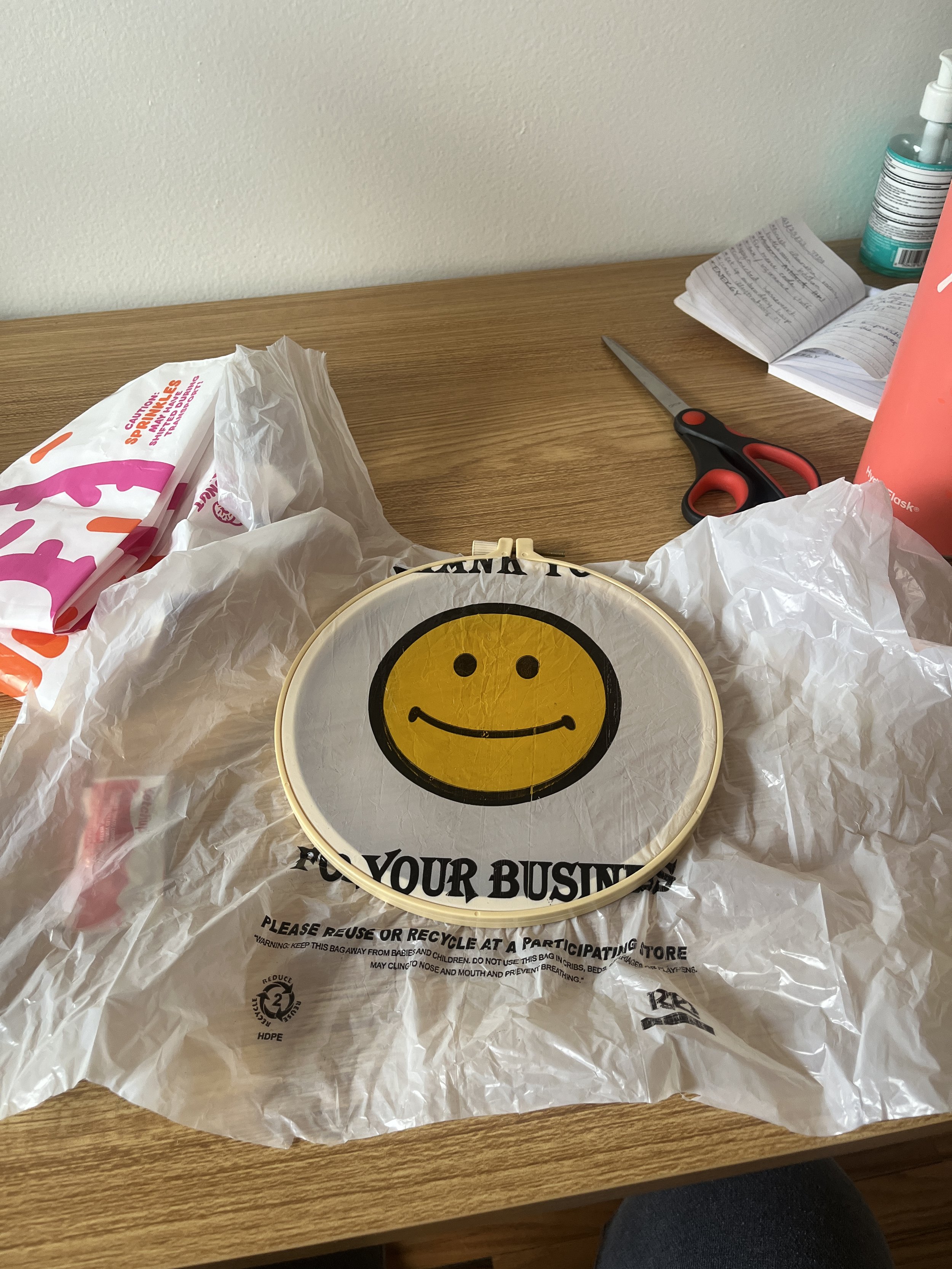

Some of the soft materials (and funny packaging) I collected last week.

Just holding these everyday materials, I thought of some crazy experiments I couldn’t get out of my mind. First: can you add embroidery to a super cute plastic bag? Answer: no.

Well, I don’t think so, anyway. I started by cutting the bag down to just the side with the graphics on it. You can see that the holes made by the needle didn’t hold their shape, and if I try to pull a stitch tight, it rips holes in the bag. I kinda want to try this again, embroidering through both layers of the bag this time. Maybe the double thickness will give the stitches a bit more integrity?

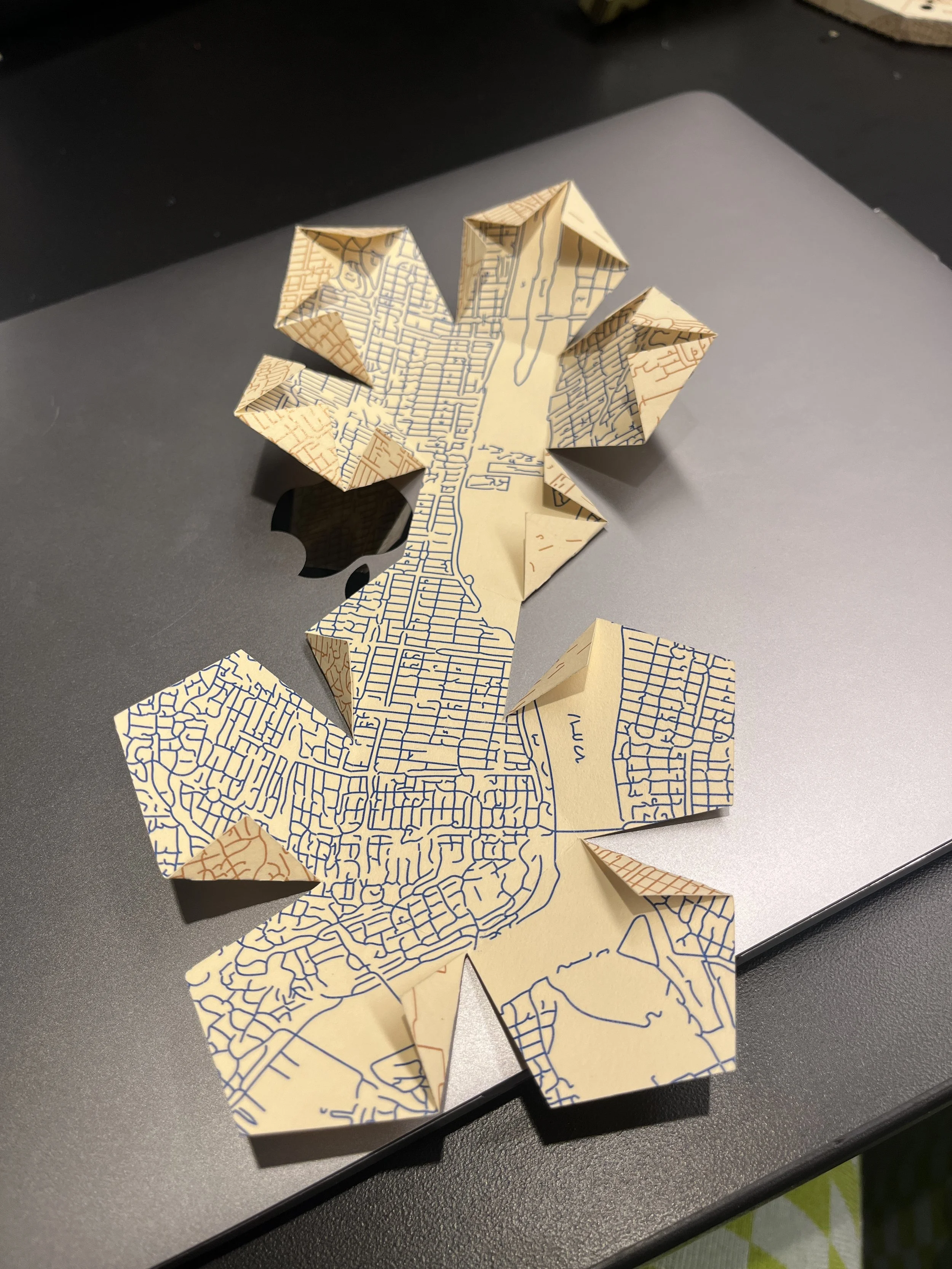

OK, this idea haunted my dreams: saucy quilt. These takeout sauce packets reminded me of my favorite toy from the first robotics class, the water wiggler. They also evoked quilt patches or crochet squares. That’s why I was bent on joining them together with needle and thread, even though it could have turned into a big mess if I had poked a hole through the sauce part of a packet.